Accountable: zkTLS and beyond

How zkTLS Brings Verifiable Trust to Web Data and Powers Accountable's Privacy-First Credit Network

➡ What is zkTLS?

zkTLS is a new technology that lets applications prove the integrity and the source of any content retrieved from a website over TLS; in short, it makes HTTPS connections verifiable.

Its power shows best in use cases where private credentials are used to retrieve data from a server (bank balances, emails, social media accounts and so on), claims are made about that private data, and sharing the credentials so that a second party can attest those claims is not an option. This is where zkTLS comes to the rescue: it lets a second party make those attestations without ever getting the credentials or seeing the private data. Because it brings trust to data without requiring changes to existing infrastructure, it opens up huge opportunities, including new ways of bridging trusted Web2 data into the Web3 world.

⚙ How does it work?

There are two main approaches: one re-routes all traffic through a proxy, while the other is MPC-based (multi-party computation) and involves another party in the protocol itself. The backbone of most MPC-based zkTLS implementations is TLSNotary, a project whose history goes back 10 years and which was recently rewritten with modern cryptography by a team backed by the Ethereum Foundation.

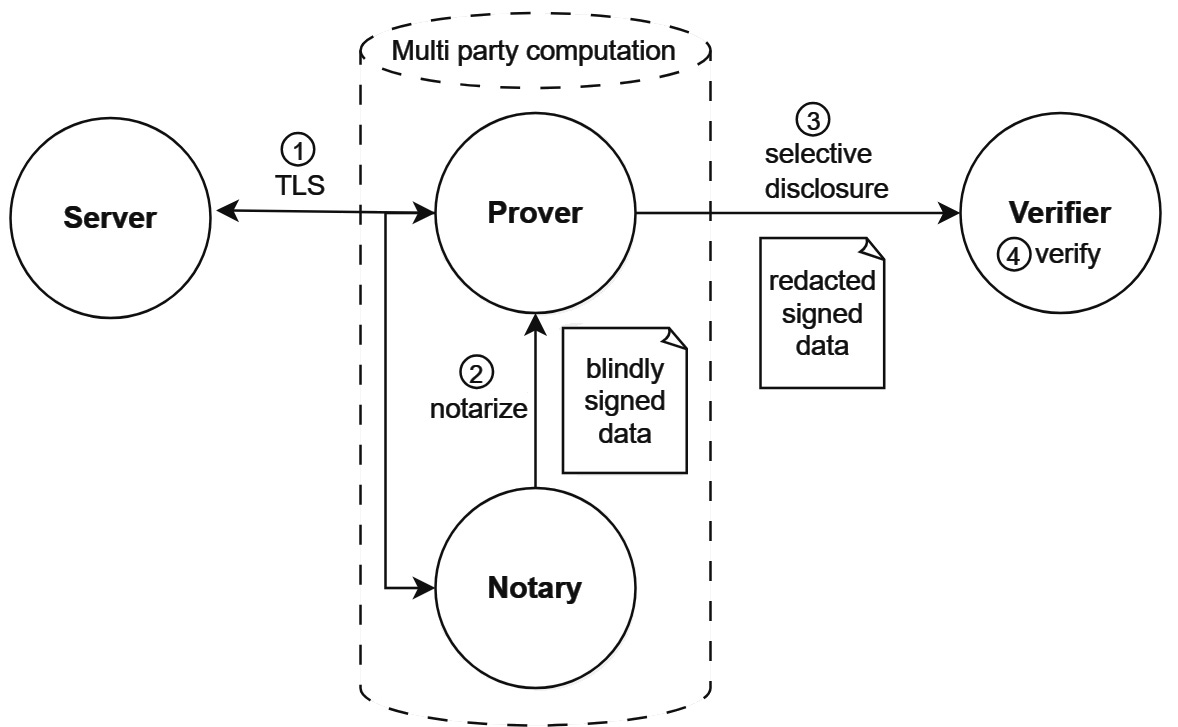

TLSNotary creates cryptographic proofs of authenticity for any data on the web, enabling privacy-preserving data provenance. It does so by turning the TLS connection from a dialogue between a receiver (usually called the Prover) and a Server into a three-party protocol. In this protocol, the Prover and a third party (called the Verifier) use multi-party computation to jointly act as the receiving endpoint in the communication with the Server. The Verifier takes part in setting up the trusted connection, making sure that the content really comes from the claimed source by verifying its certificates. It also stays involved throughout the whole TLS session, generating commitments to the data in a multi-party computation and vouching for the retrieval process, all without seeing the actual data.
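To give some intuition for how two parties can jointly act as a single TLS endpoint, here is a toy Python sketch of the underlying idea: the session key material is split into two XOR shares, one held by the Prover and one by the Verifier, so that neither share alone reveals anything about the key. This is a simplification under stated assumptions: in TLSNotary the shares are produced jointly during the handshake and encryption and decryption are evaluated in MPC, so the key is never reassembled the way `combine_shares` does here. The function names are hypothetical and not part of any zkTLS library.

```python
import secrets


def split_key(key: bytes) -> tuple[bytes, bytes]:
    """Split a key into two XOR shares.

    Each share on its own is a uniformly random string and reveals
    nothing about the key; only both shares together determine it.
    """
    prover_share = secrets.token_bytes(len(key))
    verifier_share = bytes(k ^ p for k, p in zip(key, prover_share))
    return prover_share, verifier_share


def combine_shares(prover_share: bytes, verifier_share: bytes) -> bytes:
    """Recombine both shares.

    Toy step only: in MPC-TLS the full key is never assembled like this;
    the cipher is instead evaluated jointly on the shares (e.g. in a garbled circuit).
    """
    return bytes(p ^ v for p, v in zip(prover_share, verifier_share))


if __name__ == "__main__":
    session_key = secrets.token_bytes(16)  # stand-in for an AES-128 session key
    p_share, v_share = split_key(session_key)
    assert combine_shares(p_share, v_share) == session_key
    print("each party holds", len(p_share), "random-looking bytes")
```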

To make verification non-interactive and proofs portable, and to take on the burden of the heavy computation, a Notary can act as a general-purpose Verifier and take part in the MPC-TLS session. The Notary then signs attestations of the generated commitments, enabling offline verification and any number of later checks by other verifiers.
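The sketch below illustrates what a Notary attestation buys: a signed statement over a commitment that anyone holding the Notary's public key can check later, offline. It is a minimal sketch under several assumptions: the attestation fields (`server_name`, `commitment`, `timestamp`) are hypothetical and are not TLSNotary's actual attestation format, and the signature is a Lamport one-time signature, chosen only because it can be built from `hashlib` alone. A Lamport key pair must sign a single message; a real Notary would use a conventional, reusable signature scheme.

```python
import hashlib
import json
import secrets


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _bits(digest: bytes):
    """Yield the 256 bits of a SHA-256 digest, most significant bit first."""
    for byte in digest:
        for shift in range(7, -1, -1):
            yield (byte >> shift) & 1


def lamport_keygen():
    """One-time key pair: 256 pairs of random secrets and their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(_h(zero), _h(one)) for zero, one in sk]
    return sk, pk


def lamport_sign(sk, message: bytes) -> list[bytes]:
    # Reveal one secret per bit of the message hash. Never reuse sk for a second message.
    return [sk[i][bit] for i, bit in enumerate(_bits(_h(message)))]


def lamport_verify(pk, message: bytes, signature: list[bytes]) -> bool:
    if len(signature) != 256:
        return False
    return all(
        _h(sig) == pk[i][bit]
        for i, (sig, bit) in enumerate(zip(signature, _bits(_h(message))))
    )


def notarize(sk, server_name: str, commitment: bytes, timestamp: int) -> dict:
    """Notary side: sign a canonical encoding of the attested facts."""
    body = {"server_name": server_name, "commitment": commitment.hex(), "timestamp": timestamp}
    payload = json.dumps(body, sort_keys=True).encode()
    return {"body": body, "signature": [s.hex() for s in lamport_sign(sk, payload)]}


def check_attestation(pk, attestation: dict) -> bool:
    """Any later verifier: needs only the Notary public key, no interaction."""
    payload = json.dumps(attestation["body"], sort_keys=True).encode()
    signature = [bytes.fromhex(s) for s in attestation["signature"]]
    return lamport_verify(pk, payload, signature)


if __name__ == "__main__":
    notary_sk, notary_pk = lamport_keygen()
    commitment = _h(b"salt" + b"transcript bytes")  # stand-in for a real transcript commitment
    att = notarize(notary_sk, "api.bank.example", commitment, 1700000000)
    assert check_attestation(notary_pk, att)
```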

What matters is that the TLS communication involves three parties and relies on garbled circuits, oblivious transfer and other cryptographic primitives, so that the data exchange can only happen if the Prover and the Verifier work together, while the Server sees no difference from a regular TLS connection. Equally relevant, the traffic is encrypted, and in this setup the Prover can obtain the decrypted content only with the Verifier in the loop, yet without the plaintext ever being revealed to the Verifier. This is the small detail of paramount importance that makes zkTLS disruptive: the Verifier does not see the retrieved data unless the Prover chooses to reveal it, yet it can still certify the process. Parts of the content can be redacted, letting the Prover selectively disclose data to the Verifier if desired. Zero-knowledge proofs can then be generated for the redacted parts, so the Prover can make statements about the data that derive their trust from the Notary and the TLS protocol while fully preserving privacy.
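Selective disclosure can be illustrated with salted hash commitments: the Prover commits to each part of the response, the commitments get attested, and later the Prover opens only the parts it wants to reveal. This Python sketch assumes the response has already been split into named fields (a hypothetical simplification; TLSNotary commits to byte ranges of the transcript) and does not cover the zero-knowledge proofs over redacted parts, which require a proper proving system.

```python
import hashlib
import secrets


def commit(value: bytes, salt: bytes) -> bytes:
    """Salted hash commitment. Salts are always 16 bytes here, so salt + value splits unambiguously."""
    return hashlib.sha256(salt + value).digest()


def commit_fields(fields: dict[str, bytes]) -> tuple[dict[str, bytes], dict[str, bytes]]:
    """Prover side: commit to every field. Only the commitments leave the Prover."""
    salts = {name: secrets.token_bytes(16) for name in fields}
    commitments = {name: commit(value, salts[name]) for name, value in fields.items()}
    return commitments, salts


def disclose(fields: dict[str, bytes], salts: dict[str, bytes], reveal: list[str]) -> dict[str, tuple[bytes, bytes]]:
    """Open only the chosen fields; everything else stays redacted."""
    return {name: (fields[name], salts[name]) for name in reveal}


def check_disclosure(commitments: dict[str, bytes], disclosed: dict[str, tuple[bytes, bytes]]) -> bool:
    """Verifier side: every opened field must match its attested commitment."""
    return all(
        name in commitments and commit(value, salt) == commitments[name]
        for name, (value, salt) in disclosed.items()
    )


if __name__ == "__main__":
    response = {"account_holder": b"Alice", "balance": b"1250000.00", "currency": b"USD"}
    commitments, salts = commit_fields(response)  # these commitments would be attested
    opened = disclose(response, salts, ["account_holder", "currency"])
    assert check_disclosure(commitments, opened)  # the balance stays hidden
```

The random salt matters: without it, a low-entropy redacted value such as a balance could be recovered by hashing candidate values until one matches the commitment.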

Teams like VLayer, Primus, Opacity, zkPass, Reclaim Protocol, Eternis, Pluto, DECO, clique.social and Gandalf Network are pushing the boundaries of what's possible today by building zkTLS solutions with various approaches, all with trustworthiness in mind, which is sorely needed in a world where generating content with AI is becoming cheap and trivial.

🔒 Why does Accountable need zkTLS?

Building a permissioned network for everything credit-related, and trying to restart un(der)collateralized lending on healthy foundations, puts trust at the core. Accountable takes a privacy-first approach: our software is deployed on premises or in users' private clouds. It is a decentralized protocol designed to sit at the heart of crypto credit while keeping all sensitive data private, but, as we all know, this data still needs to be trusted somehow.

We make no compromises on security, which is why Accountable is not a SaaS product: borrowers and lenders, as well as other actors, own their data; we do not store it for them and we never see their private information. Because of this approach, we need a way to trust the data our software works with and passes around peer-to-peer. That's why we use trusted connectors to retrieve balances from sources (custodians, CeFi exchanges, banks, on-chain), why we run our node and connectors in SGX secure enclaves, and why we need zkTLS. It allows us, for example, to prove that certain balances were received from a source that is indeed who it claims to be, and that those balances were not tampered with. Besides allowing us to run on untrusted hardware, it offers both integrity and source-identity proofs, raising trust in the reports Accountable generates to a very high level.

At Accountable, we view verifiability as a spectrum. We recognize that it is not possible to transition from mere claims to ZK proof-backed numbers overnight. Therefore, we aggregate data with varying levels of verifiability and report the results accordingly. Verifiability becomes a new risk dimension that people can monitor over time. Our goal is to increase verifiability by all means possible, ultimately achieving ZK-backed "truth." zkTLS is a crucial tool in reaching this objective.
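One way to picture "verifiability as a spectrum" in an aggregated report is to tag every input with the assurance level behind it and report how much of the total rests on each level. The level names and their ordering below are hypothetical, loosely following the mechanisms mentioned in this article (self-reported claims, trusted connectors, SGX enclaves, zkTLS, signed sources); this is an illustrative sketch, not Accountable's actual data model.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Verifiability(IntEnum):
    """Hypothetical assurance levels, ordered from weakest to strongest."""
    SELF_REPORTED = 0
    TRUSTED_CONNECTOR = 1
    SECURE_ENCLAVE = 2
    ZKTLS = 3
    SIGNED_SOURCE = 4


@dataclass(frozen=True)
class Balance:
    source: str
    amount: int  # integer minor units (e.g. cents) to keep sums exact
    level: Verifiability


def report(balances: list[Balance], min_level: Verifiability) -> dict:
    """Aggregate balances and expose how much of the total each level backs."""
    total = sum(b.amount for b in balances)
    by_level: dict[str, int] = defaultdict(int)
    for b in balances:
        by_level[b.level.name] += b.amount
    backed = sum(b.amount for b in balances if b.level >= min_level)
    weakest: Optional[Verifiability] = min((b.level for b in balances), default=None)
    return {
        "total": total,
        "by_level": dict(by_level),
        "share_at_or_above_min": backed / total if total else 0.0,
        # Compare against None explicitly: SELF_REPORTED has value 0 and is falsy.
        "weakest_level": weakest.name if weakest is not None else None,
    }


if __name__ == "__main__":
    book = [
        Balance("custodian", 600_00, Verifiability.ZKTLS),
        Balance("exchange", 300_00, Verifiability.TRUSTED_CONNECTOR),
        Balance("bank", 100_00, Verifiability.SELF_REPORTED),
    ]
    print(report(book, Verifiability.ZKTLS))
```

Tracking the share backed by each level over time is what turns verifiability into a risk dimension that can be monitored.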

🔐 Why not just zkTLS?

Some might argue that the guarantees obtainable with zkTLS are enough, so why doesn't Accountable rely on it exclusively? There are several reasons.

First, there are concerns related to the security rules that entities running Accountable need to enforce. Including a foreign node in the trusted connection setup phase is not always desirable. One way to mitigate this is to run the TLSNotary node on the lender side (in which case the Verifier and the Notary are the same) or to have it run by another trusted, regulated party. However, this approach also has its drawbacks, and some might argue that it would be even better if no external machine were needed at all.

Then there is protocol risk: what if there is a vulnerability in the zkTLS implementation or in TLSNotary itself and the data can be sniffed? There may well be no vulnerability lying around, and a future implementation may even withstand quantum attacks, but protocol risk cannot be dismissed and needs to be accounted for.

Another issue is that the trust obtained via zkTLS is inherently trust by consensus. In MPC implementations, this trust is obtained without the other party seeing the raw data, so privacy is preserved, but it still relies on another entity vouching for the claims. Moreover, the MPC phase is a two-party computation, so only a single other verifier is involved in the protocol, which makes the system theoretically vulnerable to collusion. There are various ways to alleviate this concern, such as reputation systems, random selection of verifiers or multiple retrieval rounds (when the content does not change), but any such solution is weaker than trust from the source, which is ultimately what we should aim for.
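The collusion mitigations can be combined, for example by picking notaries at random from a pool and accepting a retrieval only when a quorum of independent sessions attests the same content digest. This Python sketch assumes content that does not change between rounds, a pool of notary identifiers, and a hypothetical `attest` callable standing in for a full zkTLS session that returns the attested digest. It reduces the collusion risk but, as noted above, does not replace trust from the source.

```python
import hashlib
import secrets
from collections import Counter
from typing import Callable, Optional


def pick_notaries(pool: list[str], k: int) -> list[str]:
    """Draw k distinct notaries uniformly at random from the pool.

    The draw should be made by (or be verifiable to) the relying party,
    not the Prover, so the Prover cannot steer it towards a colluder.
    """
    return secrets.SystemRandom().sample(pool, k)


def quorum_digest(
    notaries: list[str],
    attest: Callable[[str], bytes],
    threshold: int,
) -> Optional[bytes]:
    """Run one retrieval per notary and return the content digest attested by
    at least `threshold` of them, or None. Use threshold > len(notaries) / 2
    so that at most one digest can ever reach it."""
    votes = Counter(attest(notary) for notary in notaries)
    if not votes:
        return None
    digest, count = votes.most_common(1)[0]
    return digest if count >= threshold else None


if __name__ == "__main__":
    pool = [f"notary-{i}" for i in range(10)]
    chosen = pick_notaries(pool, 5)
    honest = hashlib.sha256(b'{"balance":"100.00"}').digest()
    forged = hashlib.sha256(b'{"balance":"999.00"}').digest()
    colluder = chosen[0]  # one notary attests a forged digest
    result = quorum_digest(chosen, lambda n: forged if n == colluder else honest, threshold=3)
    assert result == honest
```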

✨ The Future

For these reasons, we believe there is yet another step beyond zkTLS: signed APIs.

zkTLS is a superb piece of engineering and already a huge leap forward, but the future will be about enabling self-sovereign provable aggregations and inheriting trust from the source. We believe that our approach, or something similar to it, will happen one way or another: in the near future, all valuable data will come with verifiability levels and cryptographic proofs attached.

This is easy to achieve: a hash of the content (ideally zk-friendly and computed over a normalized form) plus a signature can become the equivalent of a "lock icon for data", making it possible for recipients to build verifiable aggregations and for derived data to inherit trust from the source. It would also solve the technical part of the non-repudiation problem. Naturally, this requires changes at the information originator, and that is the crux of the problem. Aligning people and changing how things work is always difficult, and people are reluctant to update parts that already work. That's why we make it easy: transitioning to signed APIs does not require sources to change anything in their APIs or in the content they deliver; it only means adding timestamps, hashes and signatures to the HTTP response headers.
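A minimal sketch of what a source could add to its HTTP responses, assuming a JSON body: normalize the payload, hash the normalized form, and sign the hash together with a timestamp. The header names (`X-Content-Hash`, `X-Timestamp`, `X-Signature`) are hypothetical; related standards already exist, such as RFC 9530 (Digest Fields) and RFC 9421 (HTTP Message Signatures), and RFC 8785 (JSON Canonicalization Scheme) specifies a more rigorous canonical JSON form than the `json.dumps` normalization used here. SHA-256 stands in for a zk-friendly hash, and the HMAC signer in the demo is only a placeholder to exercise the plumbing: being symmetric, it cannot provide non-repudiation, so a real source would sign with an asymmetric key.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Callable, Optional


def normalize(payload) -> bytes:
    """One possible canonical form: sorted keys, no insignificant whitespace, UTF-8."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":"), ensure_ascii=False).encode("utf-8")


def content_hash(body: bytes) -> str:
    """Hash of the normalized body, so formatting differences do not change it.
    SHA-256 is a stand-in for a zk-friendly hash, which the stdlib lacks."""
    return base64.b64encode(hashlib.sha256(normalize(json.loads(body))).digest()).decode("ascii")


def signed_headers(body: bytes, sign: Callable[[bytes], bytes], now: Optional[int] = None) -> dict[str, str]:
    """Source side: extra response headers; the body itself is left untouched."""
    timestamp = str(int(time.time()) if now is None else now)
    digest = content_hash(body)
    signing_input = f"{digest}.{timestamp}".encode("utf-8")  # binds hash and time together
    return {
        "X-Content-Hash": f"sha-256={digest}",
        "X-Timestamp": timestamp,
        "X-Signature": base64.b64encode(sign(signing_input)).decode("ascii"),
    }


def check_headers(body: bytes, headers: dict[str, str], verify: Callable[[bytes, bytes], bool]) -> bool:
    """Recipient side: recompute the hash and check the signature (freshness policy not shown)."""
    digest = content_hash(body)
    if headers.get("X-Content-Hash") != f"sha-256={digest}":
        return False
    signing_input = f"{digest}.{headers.get('X-Timestamp', '')}".encode("utf-8")
    return verify(signing_input, base64.b64decode(headers.get("X-Signature", "")))


if __name__ == "__main__":
    demo_key = b"demo-only-shared-secret"

    def demo_sign(data: bytes) -> bytes:
        # Placeholder only: HMAC is symmetric and gives no non-repudiation.
        return hmac.new(demo_key, data, hashlib.sha256).digest()

    def demo_verify(data: bytes, sig: bytes) -> bool:
        return hmac.compare_digest(demo_sign(data), sig)

    compact = b'{"balance":"100.00","account":"A-1"}'
    pretty = b'{\n  "account": "A-1",\n  "balance": "100.00"\n}'
    assert content_hash(compact) == content_hash(pretty)
    headers = signed_headers(compact, demo_sign, now=1700000000)
    assert check_headers(pretty, headers, demo_verify)
```

Because the hash covers the normalized form rather than the raw bytes, a recipient can re-serialize the data and still check it, which is what makes verifiable aggregations over several sources practical.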

zkTLS is a very important intermediate step, allowing high levels of trust without changing the source's behavior in any way. But people will start seeing the benefits of identity and integrity proofs for data and will implement them, as this is a natural direction and the true Web3 approach. This holds especially in problem spaces like the one Accountable is tackling, where the change would bring huge benefits not just for transparency but also in tangible financial gains, by solving information asymmetry and unlocking un(der)collateralized credit, an important pillar of growth.

The ongoing work of the Coalition for Content Provenance and Authenticity (C2PA) and the W3C's adoption of the Verifiable Credentials standard are already laying the foundation for this. It will start with critical private data like the data Accountable handles, but it will not end there. Adoption will follow a path similar to the move from HTTP to HTTPS: first for valuable data such as financial and medical records; then it will become widespread with the help of the tech giants, once decision makers get over their aversion to ZK and blockchain technology; at some point search engines will start penalizing "untrusted content" with no cryptographic data lineage; and finally regulation will make it omnipresent. Cutting-edge fields like ZKML would also benefit from this approach, since it is not enough to prove that a model was trained "properly"; you also need proofs for the data used in that training. But that's in the future; right now, ZKML is still tackling the inference phase.

Changing the ecosystem and pushing towards signed APIs will obviously be gradual and require aligning stakeholders, but we believe the information Accountable handles is sensitive and valuable enough that we can prove this approach works, bring benefits to the players involved, and hopefully become the first niche ecosystem to change data flows for the better. The world gets changed one step at a time.